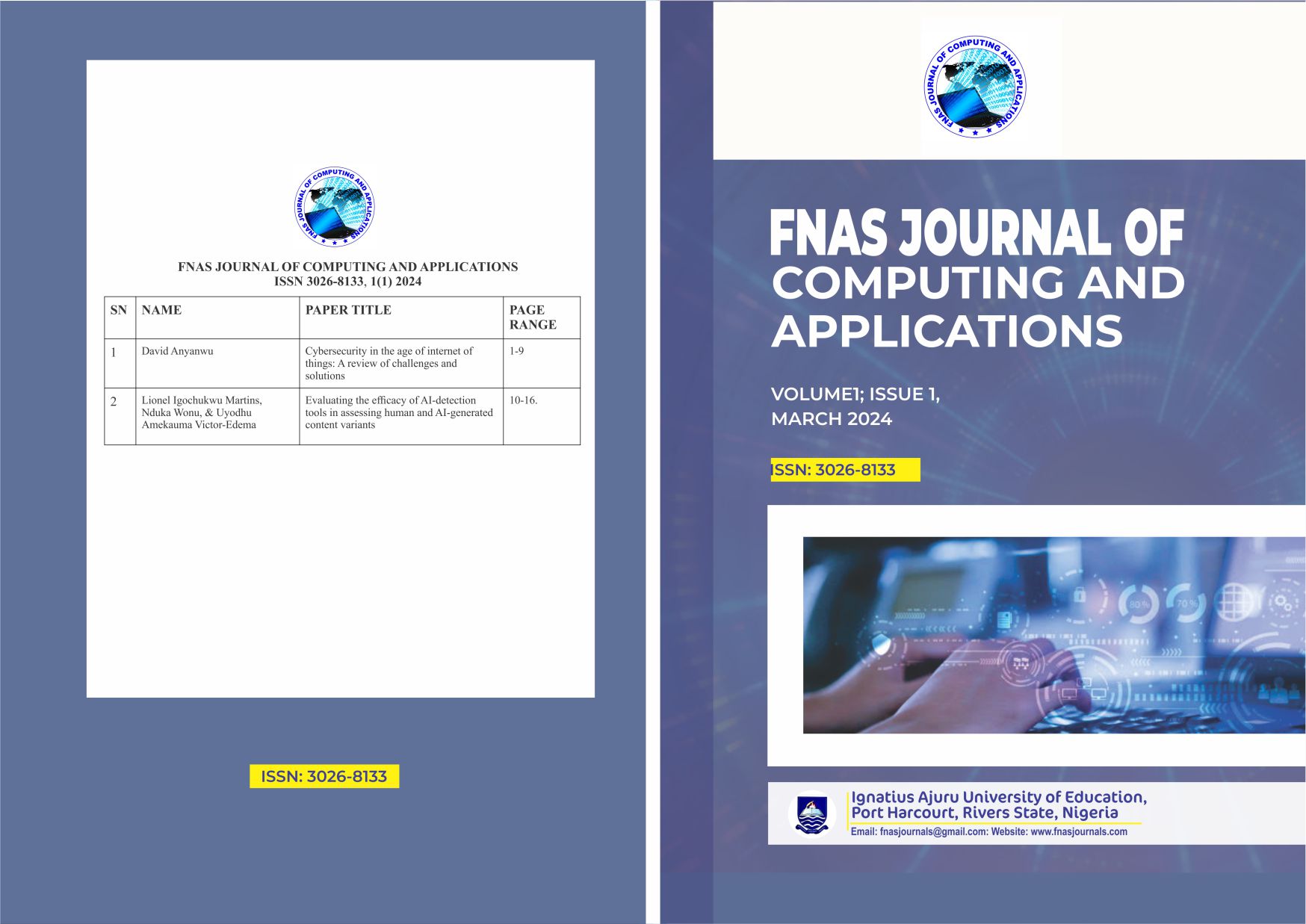

Evaluating the efficacy of AI-detection tools in assessing human and AI-generated content variants

Keywords:

Artificial Intelligence, AI-Detection Tools, AI-Generated Content, Human-Written Content, Variants

Abstract

Artificial Intelligence (AI) is increasingly used in many aspects of life, including research writing, where researchers employ AI tools to generate text, analyze data, and identify patterns in large datasets. This study evaluates the efficacy of AI-detection tools in assessing human-written and AI-generated content variants. Specifically, 15 human-written and 15 AI-generated documents were examined, and eight free AI-detection tools were randomly selected to rate them. For human-written content, all AI-detection tools performed well, with mean ratings indicating consistent accuracy in recognizing human authorship. Statistical analysis found no significant difference among the tools in their mean ratings of human-written content, suggesting comparable proficiency across the tools. Conversely, for AI-generated content, tool performance varied significantly, with some tools proving more effective than others. This variability highlights the importance of understanding each tool's capabilities in differentiating between content types. In short, the tools are consistent in detecting human-written content, but their performance in identifying AI-generated content varies considerably. Addressing these challenges and leveraging the strengths of AI technology can enhance content evaluation and verification processes.
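The abstract does not name the statistical test used to compare the tools' mean ratings. As a minimal sketch, assuming a one-way ANOVA across tools, the comparison could look like the following. The function name `one_way_anova_f` and the ratings in the usage example are hypothetical placeholders, not data from the study.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of rating lists.

    Each inner list holds one tool's ratings of the same document set.
    Assumption: a one-way ANOVA is used; the study may have used another test.
    """
    k = len(groups)                               # number of tools
    n_total = sum(len(g) for g in groups)         # total number of ratings
    grand_mean = sum(sum(g) for g in groups) / n_total

    # Variation of each tool's mean around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual ratings around their own tool's mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Placeholder ratings (percent "human") for three hypothetical tools.
    # These numbers are illustrative only and do not come from the study.
    ratings = [
        [92.0, 88.0, 95.0, 90.0],
        [85.0, 91.0, 89.0, 93.0],
        [90.0, 87.0, 94.0, 91.0],
    ]
    f_stat, df_b, df_w = one_way_anova_f(ratings)
    print(f"F({df_b}, {df_w}) = {f_stat:.3f}")
```

A large F relative to the critical value for the given degrees of freedom would point to a difference among tools, which is the pattern the abstract reports for AI-generated content but not for human-written content. Because every tool rates the same documents, a repeated-measures design may be a closer fit than this simplified sketch.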